xMapReduce Core Concepts

This guide explains the architecture and design principles of the xMapReduce framework, how it leverages XCENA’s PXL (Parallel Execution Library) for efficient parallel data processing.

What is MapReduce?

MapReduce is a programming model designed for processing large datasets across distributed systems. It was originally developed by Google and has become a standard approach for parallel computing. The model takes its name from two main operations:

- Map: Process each piece of input data independently

- Reduce: Combine intermediate results into final outputs

This approach makes it possible to handle datasets that are too large for a single computer to process efficiently.

xMapReduce Architecture

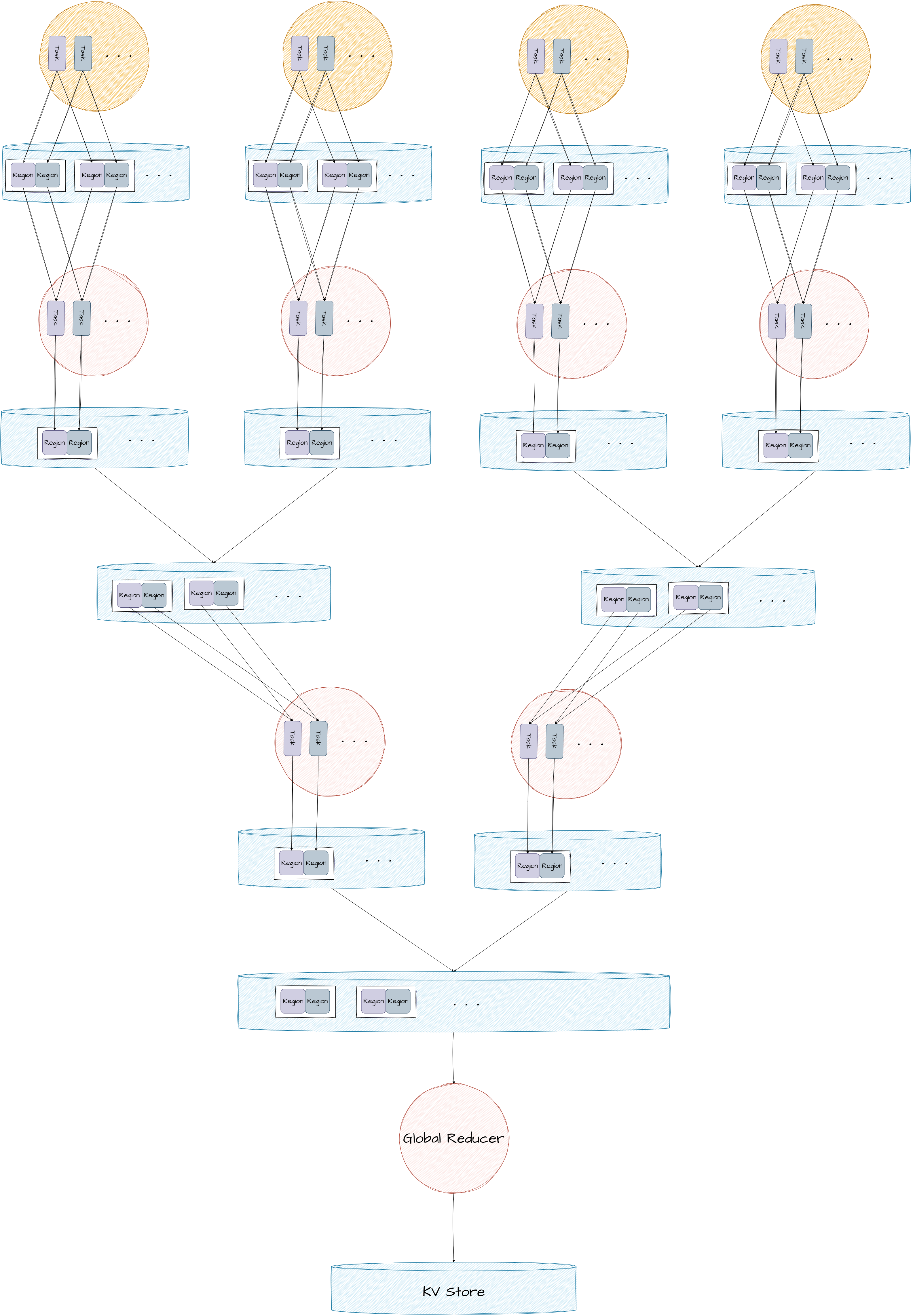

xMapReduce implements the MapReduce model using a three-phase process:

- Map Phase: Input data is divided into chunks and processed by multiple mappers in parallel

- Local Reduce Phase: Mapped data is processed by reducers that perform local aggregation

- Global Reduce Phase: Results from local reducers are combined to produce the final output

Key Components

MapReduce Class

The central component that orchestrates the entire pipeline:

- Manages parallel execution of map and reduce operations

- Handles data distribution and collection

- Controls memory allocation through KVStoreManager

- Supports execution on both host CPU and XCENA accelerator

// Example of creating and using a MapReduce instance

// Method 1: Direct constructor

auto mapReduce = std::make_unique<xmapreduce::MapReduce<MyMapper, MyReducer>>(numMapTasks, numReduceTasks, resultCapacity);

mapReduce->initialize();

mapReduce->execute(inputData, dataSize);

// Method 2: Builder pattern (newer implementation)

auto mapReduce = xmapreduce::MapReduce<MyMapper, MyReducer>::builder()

.numMaps(8)

.numReduces(4)

.numTasks(2)

.resultCapacity(1024)

.build();

mapReduce->execute(inputData, dataSize);

Mapper Interface

Base interface for implementing custom mapping logic:

class MyMapper : public xmapreduce::Mapper<InputType, xmapreduce::KeyValuePair<KeyType, ValueType>>

{

public:

void mapImpl(InputType* data, int count, xkvstore::KVStore<KeyType, ValueType>* kvStore) override

{

// Map implementation

for (int i = 0; i < count; i++) {

// Process data[i] and emit key-value pairs

kvStore->insert(key, value);

}

}

};

// Register mapper for dynamic creation at runtime

REGISTER_MAPPER(MyMapper);

Reducer Interface

Base interface for implementing custom reduction logic:

class MyReducer : public xmapreduce::Reducer<xmapreduce::KeyValuePair<KeyType, ValueType>, xmapreduce::KeyValuePair<KeyType, ValueType>>

{

public:

void reduceImpl(xkvstore::KVStore<KeyType, ValueType>* input, xkvstore::KVStore<KeyType, ValueType>* output) override

{

// Reduce implementation

// Process all values for each key and emit results

std::unordered_map<KeyType, ValueType> aggregate;

for (const auto& kv : *input)

{

aggregate[kv.getKey()] += kv.getValue();

}

for (const auto& [key, value] : aggregate)

{

output->insert(key, value);

}

}

};

// Register reducer for dynamic creation at runtime

REGISTER_REDUCER(MyReducer);

KVStore

Container for key-value pairs with efficient parallel access:

- Supports concurrent insertion from multiple tasks

- Contains multiple blocks for dynamic memory expansion

- Each block divided into regions for task-specific access

- Provides iterators for traversing stored data

- Manages memory allocations through the KVStoreManager

Block and Region Memory Structure

For efficient parallel memory management, xMapReduce uses a block-based approach:

- Block: Basic memory allocation unit containing multiple regions

- Region: Task-specific memory area within a block

This structure prevents memory conflicts during parallel execution and enables efficient memory expansion when needed.

Execution Flow

1. Initialization

When a MapReduce job is initialized:

- The MapReduce object is created with mapper and reducer types

- PXL runtime is initialized for parallel execution

- KVStoreManager creates KVStores for map and reduce phases

- Memory blocks and regions are allocated

- MapReduce registers the mapper and reducer with the registry system

2. Map Execution

During the map phase:

- Input data is divided among map tasks

- Each mapper processes its assigned data chunk in parallel

- Mappers emit key-value pairs into the map KVStore

- If a region becomes full, a new block is automatically allocated

3. Local Reduce Execution

During the local reduce phase:

- Map results are grouped by key

- Each reducer processes values for its assigned keys

- Reducers emit aggregated results to the local reduce KVStore

4. Global Reduce

After local reduction:

- Results from all local reducers are combined using KVStoreManager

- Final aggregation is performed across all intermediate results

- Results are made available through the result KVStore and can be retrieved with

getResultKVStore()

Memory Management

xMapReduce employs a block and region-based memory allocation system:

Block Allocation

When a KVStore needs more memory:

- Initial insert attempt may fail if the region is full

- KVStoreManager is notified to allocate a new block

- New block is created with multiple regions

- Block is added to the KVStore’s block list

- The failed insert operation is retried

This dynamic block allocation enables efficient handling of varying data sizes without manual memory management.

Common MapReduce Patterns

Several common patterns emerge in MapReduce applications:

- Filtering: Mappers emit only items meeting certain criteria

- Counting: Count occurrences of items (like in word count)

- Summation: Aggregate numerical values

- Averaging: Calculate averages for grouped data

- Indexing: Create indexes or inverted indexes for data retrieval

When to Use xMapReduce

xMapReduce works well for tasks that:

- Can be broken into independent sub-problems

- Involve processing each record in a similar way

- Require aggregating or combining intermediate results

- Benefit from parallel processing

For more examples, see the Examples page.